Impacts in the short- and long-term

March 25, 2024

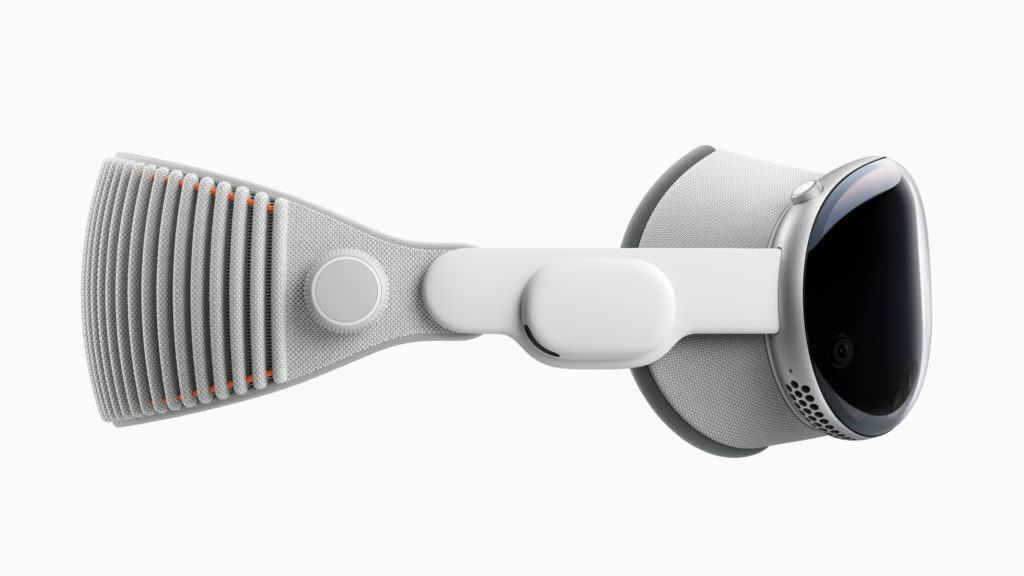

Apple’s latest hot gadget, the Apple Vision Pro, has been the subject of more video reviews than you could possibly imagine. MKBHD and Casey Neistat got in early, and many, many others have followed. While this is obviously a v1 product, and far too expensive to see mainstream adoption, there are potential impacts on our current lives as video production professionals. In the longer term, you might want to explore immersive or 3D production — and I’m certainly looking forward to it — but in the short term, there are benefits for regular 2D productions too. Let’s take a deeper look.

A brief overview

If you’re not familiar with the Apple Vision Pro, it’s a spatial computing device with very nice displays, eye tracking support and a clean user interface, and it starts at $3499. If you start by thinking of every VR headset you’ve ever seen, then make the screens higher resolution, de-emphasize gaming, remove the controllers, and integrate it with the Apple ecosystem, you’re most of the way there.

For some, it’s an uncomfortable toy, and for others, it’s the joyous future of computing. Polarization aside, it can’t go mainstream until the price comes down, but serious video production can cost serious money, so how can this thing move the needle for us? The most obvious thing would be to inspire us to create video content especially for the device, and while there are a few ways to do that today, it’s not easy to produce high quality immersive content yet. Very few other VR headsets have offered the resolution that the Apple Vision Pro does, so the bar has certainly been raised. In fact, Canon are on record: there is no camera with enough resolution for it.

Either way, one of the key features of the device is watching video on it. Virtual movie screens can be as big as you like, placed in a realistic virtual movie theater or outdoors above a lake. 3D is fully supported, and comes for free with many movies on Disney+ or purchased through iTunes. So, yes, finally, there’s a good way to deliver 3D movies to (well-heeled) consumers.

So, is it easy to record 3D video today? Actually, yes.

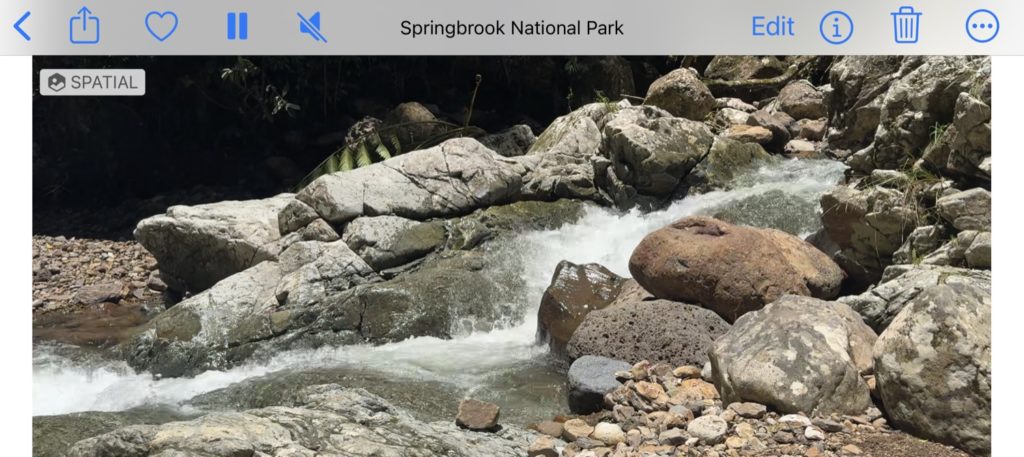

Starting out with Spatial Video

If you have an iPhone 15 Pro or Pro Max, you can shoot 3D “spatial” video now, but without an Apple Vision Pro, you won’t be able to easily view that content in 3D. In Settings > Camera > Formats, scroll down and turn on “Spatial Video for Apple Vision Pro”, then in the Camera app you’ll see a headset icon. Press this, shoot in Landscape, and you’re good to go — in 1080p30, without Log, and with tedious editing workflows. But it’s free, and a good way to decide if it’s worth investing in higher-end gear and getting ahead of the curve. You can also record video in the Apple Vision Pro itself, which records in a square 2Kx2K format.

It’s important to note that while the iPhone 15 Pro and Apple Vision Pro capture in 3D, they use a similar field of view to regular 1x iPhone video — this is not 3D 180° immersive video. Spatial Videos use a new “3D in a fuzzy box” format, and you’ll see a subtle parallax effect around the edges if you move around the frame, but it’s not a full-on immersive experience.

But that can be a good thing too — it’s 3D, framed in a similar way to regular 2D videos, and because we’re adding 3D without changing the way we frame and edit too much, that’s potentially pretty exciting for filmmakers.

So, can we step the quality up a notch? Sadly, not easily.

3D non-immersive cameras are rare

Right now, there just aren’t many pre-made options for non-immersive 3D production, because 3D TVs aren’t being made and there hasn’t been a distribution strategy for anything short of feature films. Hopefully this will change, but if you want high-end non-immersive 3D video today, you can sync two identical cameras, or use a beam splitter to record two lenses onto a single high-resolution camera sensor (cutting your resolution in half). While there are a couple of 3D lens options for interchangeable lens cameras, such as the Panasonic Lumix 12.5mm F12 3D lens and the Loreo 3D 9005, these simply aren’t good enough for general production purposes.

So what does the high end do on-set to capture 3D? Mostly, they don’t — it’s more common for the 3D version of a feature film to be created from a single camera source than from a two-lens system. This “fakery” doesn’t always produce bad results, and in fact, creating the 3D in post can avoid issues which can occur when “doing it properly”. For a whole lot more detail on stereo video production issues, read this excellent post.

So, maybe you want to create not just 3D video, but immersive content? That, you can do.

Professional 3D 180° cameras

Newer pro-budget 3D cameras have largely been focused on 3D 180° productions, because that’s been the sweet spot for immersive delivery to VR headsets, and it’s a compelling option for some kinds of projects. At the higher end, if you have a Canon EOS R5, there’s a dual-fisheye 3D RF lens for $1999. The Z CAM K1 Pro has been around for a little while, and there’s also the Calf Professional 6K 3D VR180 camera, coming out this month, that’s potentially a serious contender.

However — though an immersive production can look fantastic, with emotional impact well beyond what a 2D image can deliver, shooting in 180° puts constraints on the filming process and the end product. If you move the camera at all, those movements have to be slow and clean. Edits and transitions have to be carefully planned. Audio production needs to move beyond stereo. None of this is insurmountable, but it adds up.

Also, working with any kind of 3D brings a number of additional headaches. Are your subjects at a suitable distance for a good 3D effect: not so close that they’re distracting, nor so far away that there’s no sense of depth? Are you doing any kind of VFX? If so, that job just got far more than twice as difficult. And if you’re telling a story, do the shots flow together well when viewed in 3D, or do quick cuts and sudden perspective changes make some viewers sick?
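To put a number on the distance question, here is a minimal sketch of the standard parallel-rig stereo relationship: the offset between the left and right images (disparity d, in pixels) equals the focal length f (in pixels) times the lens baseline B, divided by the subject distance Z. It assumes an idealized pinhole camera pair with parallel, non-converged optical axes; the 65 mm baseline and 1500 px focal length are illustrative values, not figures from any camera mentioned here.

```python
def disparity_px(baseline_mm: float, focal_px: float, distance_mm: float) -> float:
    """Left/right image offset in pixels for a point distance_mm from a parallel
    stereo rig, using the pinhole relationship d = f * B / Z."""
    if distance_mm <= 0:
        raise ValueError("subject distance must be positive")
    return focal_px * baseline_mm / distance_mm


if __name__ == "__main__":
    BASELINE_MM = 65.0   # assumption: lens spacing close to typical human eye spacing
    FOCAL_PX = 1500.0    # hypothetical focal length expressed in pixels
    for metres in (0.5, 1, 3, 10, 50):
        d = disparity_px(BASELINE_MM, FOCAL_PX, metres * 1000)
        print(f"subject at {metres:>4} m -> disparity {d:6.1f} px")
```

Because disparity falls off as 1/Z, doubling a subject’s distance halves it: close subjects produce large offsets that can strain the eyes, while distant backgrounds quickly approach zero disparity and look flat. Converging the lenses or shifting the images in post moves the zero-disparity point, but the 1/Z falloff remains.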

So, if you’re happy with those constraints, is it worth considering 360° capture instead? It depends on the project, but maybe, sure.

360° options

All the constraints of 180° capture apply with bells on for 360° capture — if you can see the camera, it can see you. Yes, viewers have the freedom to look around, but it’s hard to hide the crew and you don’t know where viewers are looking. Resolution is also a massive issue, because you need a lot of dots to record the whole image sphere.

This is likely the reason Apple have never ever shown 360° video playback on the Apple Vision Pro, instead showcasing their own 3D 180° immersive content. Still, if you’ve got the budget and a vision, the best mainstream option is the Insta360 Titan, which can capture 11K in 2D or 10K in 3D.
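The resolution problem is easy to quantify as average pixels per degree: the frame width divided by the horizontal field of view it has to cover. The sketch below assumes an equirectangular frame 11,000 pixels wide (roughly what an “11K” label suggests, not any camera’s published spec) and a hypothetical target density of 40 pixels per degree.

```python
def pixels_per_degree(frame_width_px: float, horizontal_fov_deg: float) -> float:
    """Average horizontal pixel density when frame_width_px pixels span horizontal_fov_deg."""
    if horizontal_fov_deg <= 0:
        raise ValueError("field of view must be positive")
    return frame_width_px / horizontal_fov_deg


def width_needed(target_ppd: float, horizontal_fov_deg: float) -> float:
    """Frame width in pixels required to reach target_ppd across horizontal_fov_deg."""
    return target_ppd * horizontal_fov_deg


if __name__ == "__main__":
    WIDTH_PX = 11_000   # assumption: approximate width implied by an "11K" label
    TARGET_PPD = 40     # hypothetical target density, for illustration only
    for fov in (180, 360):
        print(f"{fov} deg: {pixels_per_degree(WIDTH_PX, fov):.1f} px/deg, "
              f"{width_needed(TARGET_PPD, fov):,.0f} px needed for {TARGET_PPD} px/deg")
```

Spreading the same pixels across 360° instead of 180° halves the detail in whichever direction the viewer happens to look, which is why 360° capture demands such large frames.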

So what about post?

Post-production considerations

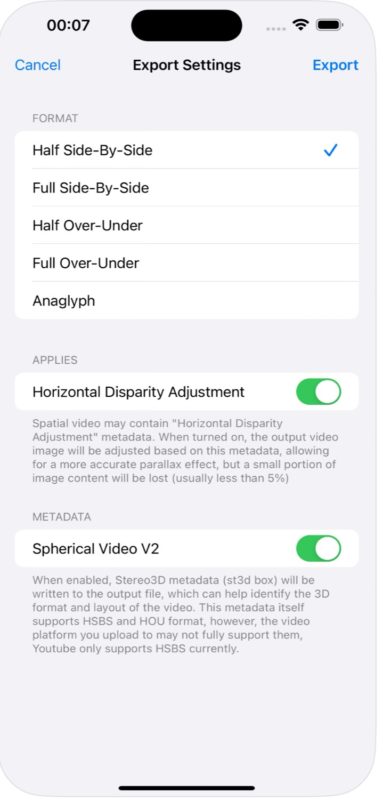

Many NLEs support 180° and 360° immersive projects, and while 3D is often supported as a subset of that, non-immersive 3D support is less common. DaVinci Resolve Studio has full support today, and Final Cut Pro will be adding Spatial Video support this year. But as 3D can simply be placed side-by-side in a wide frame, it’s possible to perform basic editing in just about any NLE, as long as you’re comfortable with a little pre- and post-processing.

Apple’s spatial video uses MV-HEVC, an extension to the HEVC standard, to (at least potentially) encode the two stereo streams of video more efficiently. If your software can’t deal with this directly, you’ll have to use an app like Spatialify to convert to an older, more compatible format like Side-By-Side or Over-Under, where the two streams are simply placed adjacent to one another in a wide or tall frame. This can be edited more or less normally, and eventually converted into whatever format you need to deliver.
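For readers curious what that conversion produces, here is a minimal sketch of Side-By-Side and Over-Under packing. It assumes the two eye views have already been decoded from MV-HEVC into same-sized pixel arrays (decoding is out of scope), and it models frames as plain Python lists of rows so it runs without extra libraries; it is not how any particular conversion app is implemented. It also assumes the common left-eye-first order; some tools expect the reverse, so check what your NLE wants.

```python
from typing import List, Tuple

# A frame is a list of rows of pixel values: a stand-in for real decoded image data.
Frame = List[List[int]]


def _check_same_size(left: Frame, right: Frame) -> None:
    """Raise if the two eye views do not have identical dimensions."""
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right eye frames must have identical dimensions")


def pack_side_by_side(left: Frame, right: Frame) -> Frame:
    """Put the left eye in the left half and the right eye in the right half of one wide frame."""
    _check_same_size(left, right)
    return [l_row + r_row for l_row, r_row in zip(left, right)]


def pack_over_under(left: Frame, right: Frame) -> Frame:
    """Stack the left eye above the right eye in one tall frame."""
    _check_same_size(left, right)
    return [row[:] for row in left] + [row[:] for row in right]


def unpack_side_by_side(frame: Frame) -> Tuple[Frame, Frame]:
    """Split a side-by-side frame back into (left, right) eye views."""
    if not frame or len(frame[0]) % 2:
        raise ValueError("expected a non-empty frame with an even width")
    half = len(frame[0]) // 2
    return [row[:half] for row in frame], [row[half:] for row in frame]


def unpack_over_under(frame: Frame) -> Tuple[Frame, Frame]:
    """Split an over-under frame back into (left, right) eye views."""
    if not frame or len(frame) % 2:
        raise ValueError("expected a non-empty frame with an even height")
    half = len(frame) // 2
    return [row[:] for row in frame[:half]], [row[:] for row in frame[half:]]


if __name__ == "__main__":
    left = [[1, 2], [3, 4]]   # toy 2x2 left-eye frame
    right = [[5, 6], [7, 8]]  # toy 2x2 right-eye frame
    sbs = pack_side_by_side(left, right)
    print(sbs)  # prints [[1, 2, 5, 6], [3, 4, 7, 8]]
    assert unpack_side_by_side(sbs) == (left, right)
```

This is full-width packing, so the packed frame is twice as wide (or tall) as each eye. Half-width variants instead squeeze each eye into the original frame size, trading away half of each eye’s horizontal (or vertical) resolution for compatibility.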

VFX and roto are where this gets truly tricky, though, because you’re not just doing the same work twice: it has to be seamless across both eyes. You’ll really need your software to be 3D-aware to get the job done right, and you won’t be able to get away with all the tricks you might employ in 2D. This topic gets complex fast, so I won’t go too far down this rabbit hole. If you want to learn more, be sure that you’re following up-to-date tutorials; 3D has had a few resurgences over the past decade, and it’s easy to find tutorials from ten years back. Hugh Hou has a ton of current content if you’re looking for a good starting point.

So 3D isn’t really “easy” yet, though it could become more popular eventually. What about 2D production?

Using the Apple Vision Pro as a 2D production tool

Today, one of the main strengths of the Apple Vision Pro is that it can give the wearer a private video feed, at any size, with excellent quality, wherever they are, while also seeing their surroundings. Most iPhone and iPad camera apps can run directly on the Apple Vision Pro, and if those apps can hook directly into video feeds from their connected devices, that’s an easy way to go. If that fails, it’s always possible to share a Mac’s screen directly, so a Mac with a capture card can put any video feed into your environment. There’s also an app called Bezel which can send an iOS device’s screen directly into the Apple Vision Pro.

While it’s obvious that virtual production can benefit from a 3D view through a headset, the possibilities in a regular production environment are pretty tantalizing. If you’re a drone operator, that means you can see the view from your drone at the same time as you can see the drone itself, even in direct sunlight. It’s not like an FPV drone with goggles, because it doesn’t take over your perspective entirely; the drone’s image sits in your normal view. Here’s one early adopter, and another. Beyond drones, solo operators of all kinds suddenly have access to a large virtual monitor as they shoot, and being able to see all the angles from a multi-camera shoot at once can avoid real hassles later.

If you’re a director, you don’t have to stay in video village, but can stand right next to the set, seeing the actors in person alongside live camera feeds. If you’re a DOP or camera operator, you don’t have to share a small display with the director, but instead, you can view a large display from anywhere you want. This video from Hugh Hou has plenty of great tips:

For best results, hook up a wireless-capable monitor like the Hollyland M1 Enhanced on-set monitor, as seen above. You may have to allow for a little lag, so pulling focus might not be ideal, but your virtual screen will be much nicer and larger than most people ever get to use on set.

Crucially though, it’s not just a video feed that you can keep in view alongside the real world. You can show a script, be in a video chat with remote collaborators, display reference shots for color matching, continuity or inspiration, see urgent messages delivered privately and silently, and show anything else you want to keep in view. If your lights are app-controllable, keep that app in there too.

Camera-to-cloud could enable an instant review of anything today, but how about a live feed from an editor rough-cutting the last few scenes together as you’re watching the next shot being recorded? How about a feed from the on-set colorist showing you what they’ve been able to do so far? What if a future app could place the lines of a script next to the characters about to speak them? Apps on other devices can do some of these tricks, but certainly not all, and industry tech companies are preparing solutions.

We’ve never had anything like this before, and we’re right at the start. Change isn’t always popular, but there’s a real opportunity for large and small productions to use the Apple Vision Pro for tasks that previous VR headsets just couldn’t help with. Either their screens weren’t good enough, or their passthrough was terrible, or you had to use controllers, or all of these things. It’s fantastic to see something new, and to be able to see how much better it’s going to get.

Conclusion

It seems likely that a future version of the Apple Vision Pro (or some future competitor) will enable some kind of holodeck-like experience, and I look forward to eventually retiring in a virtual world. But as imperfect as today’s device may be, it can dovetail into enough of our existing workflows to become a useful part of them now. While the future of immersive filmmaking is brighter than it was last year, it’ll be a while before it goes mainstream. In the meantime, regular filmmaking, at high or low budgets, can start to benefit.

Finally, something that’s not just a slightly better version of last year’s gadget. Interesting times indeed.
